The 2016 presidential election was plagued by fake news, as foreign adversaries used disinformation to sow discord among the U.S. electorate. Now a potentially more dangerous threat is emerging: new technology is making it easier than ever to manipulate video and audio recordings. These videos, called deepfakes, can be so realistic that it is difficult to tell they are forgeries. So, if 2016 was all about fake news, will 2020 be the election of deepfakes?

Just one election cycle after Russia’s interference through hacks and fake social media accounts, experts say the new batch of presidential candidates will likely face doctored videos, created with artificial intelligence, that can make them appear to say or do almost anything.

An altered video of House Speaker Nancy Pelosi (D-CA) posted to Facebook on May 23 was edited to make the congresswoman appear drunk during a news conference, and had been viewed over 2.5 million times before it was flagged as fake. Following a war of words between President Donald Trump and Pelosi, Trump waded into the controversy, sharing a clip from Fox News that compiled moments where she stumbled over her words.

While the doctored Pelosi video was not a product of the advanced technologies behind deepfakes, it raised the question of whether these more sophisticated altered videos could pose a threat to the 2020 election.

According to an article in Foreign Affairs co-authored by former acting CIA Director Michael Morell, “Russian disinformation ahead of the 2016 election pales in comparison to what will soon be possible with the help of deepfakes – digitally manipulated audio or video material designed to be as realistic as possible.”

Fabrice Pothier, a senior advisor for the Transatlantic Commission on Election Integrity, told The Hill that foreign actors could use fake videos “to sow distrust” and discredit candidates.

While the Pelosi video is crude by comparison, Pothier noted that more advanced deepfakes are quickly approaching. “We are still at a rudimentary stage – the Pelosi video is a basic alteration of an authentic video,” he said. “But at this pace, it is only a matter of time before fully synthetic video and audio files, or deepfakes, generated by algorithm rather than with video editing tools, contaminate our information sphere.”

The Pelosi video is the latest example of how easily individuals can now alter content to disrupt the democratic process. Deepfakes could be weaponized, with candidates using them to assassinate an opponent’s character. That isn’t hard to imagine, given that Trump shared an altered video of Pelosi in an attempt to illustrate that she had “lost it.” This is particularly concerning in a highly polarized climate where voters arguably believe whatever the candidates they support tell them.

Targets of these deepfakes would also face the daunting task of proving that the incident depicted never happened. Meanwhile, a candidate caught in a genuinely compromising situation could claim the footage was forged.

These altered videos could be especially damaging if they are released right before polls open. Politicians have already begun sounding the alarm on this possibility, including Sen. Marco Rubio (R-FL), who said during a Senate Intelligence Committee hearing last May that he believed deepfakes would be used in “the next wave of attacks against America and Western Democracies.” Rubio described a scenario in which a deepfake is posted online immediately before an election, goes viral, and is reported on before it can be proven a hoax.

The concern is that even if viewers are later persuaded that a video is forged, they may not be able to shake the negative impression it left, and their judgment could still be psychologically influenced by it.

“Even if you can disprove a fake video, if you can show it’s a forgery, the negative impression watching it… you can never erase,” Rep. Adam Schiff (D-CA) told reporters, according to Reuters.

Alternatively, people may refuse to accept that a video is fake and dismiss efforts to debunk it as a conspiracy.

While deepfakes are not yet pervasive in the United States, intelligence officials are concerned it’s only a matter of time before foreign adversaries use them. Presenting the intelligence community’s Worldwide Threat Assessment to the Senate Intelligence Committee in January, Director of National Intelligence Dan Coats warned that “adversaries and strategic competitors probably will attempt to use deepfakes or similar machine-learning technologies to create convincing – but false – image, audio, and video files to augment and influence campaigns directed against the United States and our allies and partners.”

As the 2020 race approaches, researchers are developing automated tools to combat deepfakes.

Hany Farid, a professor and image forensics expert at Dartmouth College, is building “soft biometric models” to distinguish an individual from a fake version of themselves, according to CNN Business. He and other experts have pored over hours of video of all the presidential candidates, mapping out each one’s distinctive speaking style so it can be compared against suspected forgeries. The goal is software that can detect political deepfakes and potentially authenticate genuine videos that have been falsely labeled as forgeries.

To illustrate, Farid pointed to former President Barack Obama’s speaking style and the viral deepfake BuzzFeed released last year, in which Obama appears to make a number of questionable comments that were actually spoken by filmmaker Jordan Peele.

“[There’s a] link between what Obama says and how he says it, and we build what we call soft biometrics that we then can [use to] analyze a deepfake and say, ‘Oh, in that video, the mouth, which is synthesized to be consistent with Jordan Peele’s voice, is in some ways decoupled from the rest of the head. It’s physically not correct,’” Farid said.

But in this deepfake arms race, the technology is advancing every day. As experts develop ways to spot fake videos, deepfake creators are quickly finding ways to make them more realistic.

“I think the challenge is that it is easier to create manipulated images and video and that can be done by an individual now,” Matt Turek, a program manager at the Defense Department’s Defense Advanced Research Projects Agency (DARPA), told ABC News in December. “Manipulations that may have required state-level resources, or several people and a significant financial effort, can now potentially be done at home.”

With the technology readily available and these fake videos becoming harder to detect, voters will need to become better detectives and work harder to discern fact from fiction.

One way to tell truth from deepfake is to “refrain from jumping to strong conclusions in either direction without additional information and confirmation,” Farid told BuzzFeed News. Consider where the content originated and whether more information about the video has surfaced, for example in news reports. Also, watch the video frame by frame for glitches, particularly around the subject’s mouth, since deepfakes often struggle “to accurately render the teeth, tongue, and mouth interior. So, keep a watchful eye on the mouth for [visual anomalies].”

Since deepfakes could pose a real threat to the 2020 election, it is imperative to stay vigilant so that they do not undermine the democratic process.

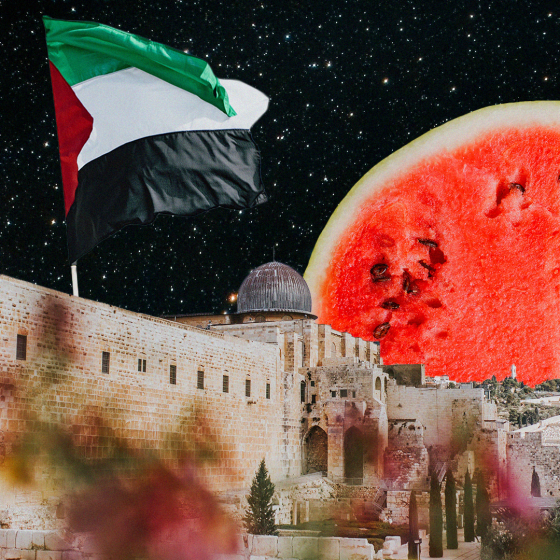

Artwork by Esme Rose Marsh